Practical AI for Maternal Health Surveillance

August 9, 2025

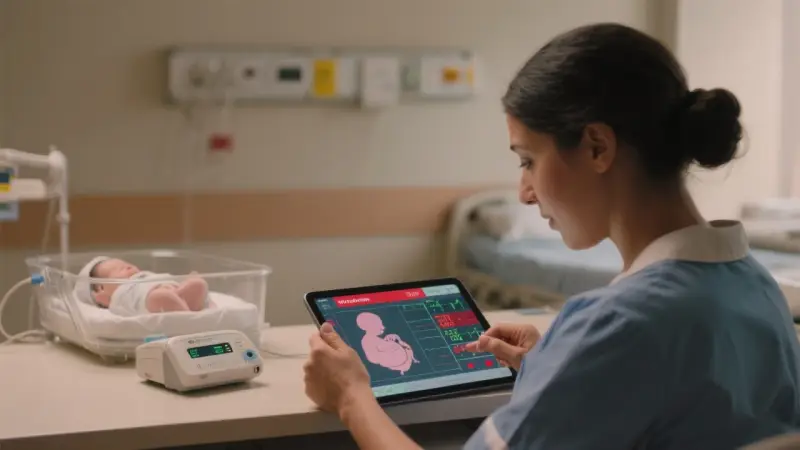

Thoughtful, small‑scale AI can strengthen maternal health surveillance without overhauling systems. The goal is straightforward: spot risks and patterns earlier, close care gaps faster, and learn what’s working across facilities, all while protecting privacy and dignity. Early wins come from cleaning and structuring the information already in hand, not chasing exotic models. Start with outcome definitions and workflows you can sustain; the same discipline used in AI for population health management applies here. And because findings will inform policy and program decisions, align metrics with the patient‑centered principles in choosing outcomes that matter.

Maternal health surveillance draws from case reviews, routine EHR and registry feeds, vital statistics, and community reports. Each source has strengths and blind spots. AI helps by turning unstructured notes into usable fields, flagging missing or implausible values, and surfacing time‑sensitive signals—always with a human in the loop. Pair these improvements with the ethical guardrails outlined in public health ethics in AI deployment to keep trust front and center.

Choose clear outcomes and windows

Begin with a small set of outcomes tied to decisions clinicians and public‑health teams can act on within 7–30 days. Examples:

- Severe hypertension within 10 days postpartum

- Hemorrhage requiring transfusion at delivery or within 48 hours postpartum

- Sepsis within 7 days of delivery or abortion care

- Missed postpartum visit by day 10 for high‑risk patients

- Late antenatal care (ANC) initiation (first visit after 20 weeks) and incomplete ANC sequence

Write short, plain‑language definitions and freeze the prediction and observation windows. Avoid label leakage: do not include actions that only happen after an adverse event (e.g., rescue medication orders) in the prediction look‑back. When you later write up results for decision‑makers, mirror the clarity used in translating epidemiology for policymakers.
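As a minimal sketch of what frozen windows and a leakage guard could look like in code: the index time is assumed here to be the time of delivery, and the 30‑day look‑back, the event type names, and the `split_events` helper are all illustrative assumptions rather than part of any specific system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class OutcomeWindow:
    """Frozen outcome definition. All names and values are illustrative."""
    name: str
    lookback_days: int      # how far before the index time features may reach
    observation_days: int   # how long after the index time the outcome counts


# Assumption: index time is delivery; the 30-day look-back is a placeholder.
SEVERE_HTN = OutcomeWindow("severe_postpartum_htn", lookback_days=30, observation_days=10)

# Hypothetical event types that only appear once an adverse event is under way.
LEAKY_EVENT_TYPES = {"rescue_medication_order"}


def split_events(events, index_time, window):
    """Return (feature_events, outcome_events) for one patient."""
    start = index_time - timedelta(days=window.lookback_days)
    end = index_time + timedelta(days=window.observation_days)
    features = [
        e for e in events
        if start <= e["time"] < index_time and e["type"] not in LEAKY_EVENT_TYPES
    ]
    outcomes = [
        e for e in events
        if index_time <= e["time"] <= end and e["type"] == window.name
    ]
    return features, outcomes


index_time = datetime(2025, 3, 1, 12, 0)
events = [
    {"type": "bp_reading", "time": datetime(2025, 2, 27, 9, 0)},
    {"type": "rescue_medication_order", "time": datetime(2025, 2, 28, 9, 0)},
    {"type": "severe_postpartum_htn", "time": datetime(2025, 3, 5, 8, 0)},
    {"type": "severe_postpartum_htn", "time": datetime(2025, 3, 20, 8, 0)},  # after the window
]
features, outcomes = split_events(events, index_time, SEVERE_HTN)
```

Making the window object immutable is one way to keep the definition from drifting between the silent run‑in and later evaluation.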

Put the data you already have to work

Most maternal programs already collect more data than they can use. The bottleneck is structure and signal, not volume.

- Structure free text

  - Extract key fields from case narratives: timing of onset, symptoms, delays (decision, transport, care), blood pressure ranges, estimated blood loss (EBL), interventions, and outcomes. LLMs can do this well when supplied with a concise schema and dozens of annotated examples, with reviewers spot‑checking the extracted fields.

  - Normalize units and map terms to controlled vocabularies (SNOMED, LOINC). Keep a translation table for local shorthand.

- Fill obvious gaps

  - Identify missing vitals/labs that should exist around key events (e.g., no postpartum blood pressure recorded within 72 hours).

  - Flag inconsistent timestamps (delivery after discharge) and duplicated encounters.

- Build simple composites

  - Compose indicators that mean something to clinicians: “severe range BP plus symptoms” or “rapid EBL rise with hypotension.”

  - Track pathway markers: triage time to first BP, order‑to‑administration time for antihypertensives, time to transfer.
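The gap and consistency checks above can be sketched in a few lines. The record layout (`delivery_time`, `discharge_time`, `bp_times`, `encounter_id`, `patient_id`) and the flag names are assumptions made for illustration; a real feed will need its own field mapping.

```python
from datetime import datetime, timedelta


def quality_flags(encounter, bp_window_hours=72):
    """Return a list of data-quality flags for one delivery encounter."""
    flags = []
    delivery = encounter["delivery_time"]
    if delivery > encounter["discharge_time"]:
        flags.append("delivery_after_discharge")
    cutoff = delivery + timedelta(hours=bp_window_hours)
    if not any(delivery <= t <= cutoff for t in encounter["bp_times"]):
        flags.append("no_postpartum_bp_in_window")
    return flags


def duplicate_encounters(encounters):
    """Return encounter IDs that repeat an earlier (patient, delivery time) pair."""
    seen, duplicates = set(), []
    for e in encounters:
        key = (e["patient_id"], e["delivery_time"])
        if key in seen:
            duplicates.append(e["encounter_id"])
        else:
            seen.add(key)
    return duplicates


delivery = datetime(2025, 3, 1, 6, 0)
good = {"encounter_id": "E1", "patient_id": "P1", "delivery_time": delivery,
        "discharge_time": delivery + timedelta(days=2),
        "bp_times": [delivery + timedelta(hours=20)]}
bad = {"encounter_id": "E2", "patient_id": "P2", "delivery_time": delivery,
       "discharge_time": delivery - timedelta(hours=3),
       "bp_times": [delivery + timedelta(hours=90)]}
repeat = dict(good, encounter_id="E3")  # same patient and delivery time as E1

good_flags = quality_flags(good)
bad_flags = quality_flags(bad)
dups = duplicate_encounters([good, bad, repeat])
```

Flags like these are meant for human review queues, not automatic correction.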

These steps mirror the pragmatic checks used in EHR data quality for real‑world evidence. Small, consistent improvements compound quickly.
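A composite such as “severe range BP plus symptoms” and a pathway marker such as triage time to first BP might be computed as follows. This is a sketch under stated assumptions: the 160/110 mmHg thresholds are a commonly used definition of severe range that should be confirmed against the local protocol, and the symptom codes are hypothetical.

```python
from datetime import datetime

# Assumption: severe range means systolic >= 160 or diastolic >= 110 mmHg.
SEVERE_SYSTOLIC = 160
SEVERE_DIASTOLIC = 110

# Hypothetical symptom codes; a real list would come from clinicians.
WARNING_SYMPTOMS = {"severe_headache", "visual_changes", "epigastric_pain"}


def severe_bp_plus_symptoms(systolic, diastolic, symptoms):
    """True when a severe-range reading coincides with a warning symptom."""
    severe = systolic >= SEVERE_SYSTOLIC or diastolic >= SEVERE_DIASTOLIC
    return severe and bool(WARNING_SYMPTOMS & set(symptoms))


def minutes_between(start, end):
    """Pathway marker helper, e.g. triage time to first BP."""
    return (end - start).total_seconds() / 60


flag = severe_bp_plus_symptoms(162, 95, ["severe_headache"])
triage_to_bp = minutes_between(datetime(2025, 3, 1, 10, 0), datetime(2025, 3, 1, 10, 25))
```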

A short, explainable model beats a flashy black box

When prediction is helpful, say to prioritize postpartum outreach, prefer clarity. A calibrated logistic regression or gradient‑boosted tree with a handful of features (recent severe BP, prior preeclampsia, interpreter need, missed appointments, distance to clinic) is often enough. Focus evaluation on precision among the top N patients, where N equals the team’s outreach capacity, not only on AUROC. Run subgroup checks across race/ethnicity (when collected), language, age, payer, and neighborhood. The fairness habits in AI for population health management translate directly to surveillance use cases.
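Precision at a capacity‑matched cutoff is simple to compute. The sketch below assumes risk scores and binary outcome labels are already available as parallel lists; the function name and the sample values are illustrative.

```python
def precision_at_capacity(scores, labels, capacity):
    """Share of true cases among the `capacity` highest-scoring patients."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    top = ranked[:capacity]
    if not top:
        return 0.0
    return sum(label for _, label in top) / len(top)


scores = [0.9, 0.8, 0.7, 0.1]
labels = [1, 0, 1, 0]          # 1 = patient truly had the outcome
p_at_2 = precision_at_capacity(scores, labels, capacity=2)
p_at_3 = precision_at_capacity(scores, labels, capacity=3)
```

Setting `capacity` to the number of calls the team can actually make each week ties the metric to the decision it supports.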

Workflows first: who acts, when, and how

The model or dashboard is not the product—workflow is. Draw the flow before you code:

- Who receives the alert or weekly list? Consider postpartum nurses, CHWs, or case managers.

- What action do they take? Call, text, schedule a blood pressure check, arrange transport, deliver a cuff, or escalate.

- What script and resources support that action? Include interpreter services and stigma‑free language.

- How long should it take? Aim for same‑day action on severe‑range blood pressure.

- How is outcome captured and fed back? Close the loop with structured reasons (reached/scheduled/declined/wrong number/needs social worker) and a place for short notes.
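One way to capture structured outreach outcomes is a small record type with a fixed set of reasons plus a short note. The reason codes follow the list above; the field names and the 280‑character note limit are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from enum import Enum

MAX_NOTE_CHARS = 280  # assumption: keep notes short enough to scan quickly


class OutreachResult(Enum):
    REACHED = "reached"
    SCHEDULED = "scheduled"
    DECLINED = "declined"
    WRONG_NUMBER = "wrong_number"
    NEEDS_SOCIAL_WORKER = "needs_social_worker"


@dataclass
class OutreachRecord:
    patient_key: str          # pseudonymous key, not a name or phone number
    contact_date: date
    result: OutreachResult
    note: str = ""

    def __post_init__(self):
        if len(self.note) > MAX_NOTE_CHARS:
            raise ValueError("note exceeds the short-note limit")


records = [
    OutreachRecord("a1", date(2025, 3, 3), OutreachResult.SCHEDULED, "BP check Thursday"),
    OutreachRecord("b2", date(2025, 3, 3), OutreachResult.WRONG_NUMBER),
    OutreachRecord("c3", date(2025, 3, 4), OutreachResult.SCHEDULED),
]
tally = Counter(r.result for r in records)
```

A closed set of reasons keeps weekly tallies comparable across facilities, while the note field preserves context.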

Embed tasks where staff already work—ideally in the EHR’s in‑basket or registry. If you use an external tool, make sign‑on seamless and push outcomes back to the record of care. For counseling workflows, align content with autonomy‑preserving principles in AI‑supported contraceptive counseling.

Governance and transparency

Publish a one‑page model card and a plain‑language explainer: what the system does, who it helps, what it does not do, and how people can raise concerns. Keep a change log for data sources and thresholds. Report monthly performance and equity metrics to a small governance group with the authority to pause or change the system—the same operational ethic outlined in public health ethics in AI deployment.

Evaluation that leaders trust

You do not need a year‑long trial to learn if the system helps. Favor designs that fit busy clinics:

- Silent run‑in: score patients for four weeks without acting on the scores, then compare predictions with observed outcomes.

- Capacity‑constrained randomization: when you can reach only half the high‑risk list, randomize who gets outreach and compare timely postpartum BP checks.

- Staggered rollout: bring clinics online in random order and compare early vs. later adopters.

- Interrupted time‑series: track monthly rates of severe postpartum hypertension events for 12 months before and after launch; adjust for seasonality.
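Capacity‑constrained randomization can be as simple as a seeded shuffle of the high‑risk list. This is a sketch, not a trial protocol: the function name, the seed handling, and the patient keys are assumptions.

```python
import random


def assign_outreach(patient_keys, capacity, seed):
    """Randomly pick `capacity` patients for outreach; the rest form the comparison group."""
    rng = random.Random(seed)           # recorded seed makes the draw auditable
    shuffled = sorted(patient_keys)     # fixed starting order so the seed reproduces the draw
    rng.shuffle(shuffled)
    return {
        "outreach": sorted(shuffled[:capacity]),
        "comparison": sorted(shuffled[capacity:]),
    }


high_risk = [f"p{i:02d}" for i in range(10)]
arms = assign_outreach(high_risk, capacity=5, seed=20250809)
again = assign_outreach(high_risk, capacity=5, seed=20250809)
```

Logging the seed alongside each weekly list lets a reviewer reproduce exactly who was assigned to each arm.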

Pair clinical outcomes with operational measures: contact rates, time to action, and staff experience. Present results using the concise structure in AI‑assisted evidence synthesis for policy briefs so decision‑makers see both benefits and limitations.

Equity by design, not afterthought

Surveillance must illuminate disparities and avoid reinforcing them. Practical steps:

- Stratify lists and outcomes by language, race/ethnicity (when collected), age, payer, and neighborhood deprivation.

- Monitor coverage (who appears on lists) and precision (who truly needs outreach). Over‑ or under‑selection of certain groups should trigger investigation and remedy.

- Add features that capture access barriers without stigma: interpreter need, transport benefit use, recent address changes.

- Co‑create scripts with community input. Train staff to avoid stigmatizing language (“Our records show you are high risk”) in favor of support‑oriented phrasing (“We’d like to make follow‑up easier—what would help?”).
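Coverage and precision by subgroup can be monitored with a short tally. The sketch below assumes each record carries a group label, whether the patient appeared on the outreach list, and whether outreach turned out to be needed; all field names are illustrative.

```python
from collections import defaultdict


def subgroup_report(records):
    """Per-group coverage (share selected) and precision (share of selected who needed outreach)."""
    totals = defaultdict(lambda: {"n": 0, "selected": 0, "true_pos": 0})
    for r in records:
        t = totals[r["group"]]
        t["n"] += 1
        if r["selected"]:
            t["selected"] += 1
            if r["needed_outreach"]:
                t["true_pos"] += 1
    report = {}
    for group, t in totals.items():
        report[group] = {
            "coverage": t["selected"] / t["n"],
            # Precision is undefined when nobody in the group was selected.
            "precision": t["true_pos"] / t["selected"] if t["selected"] else None,
        }
    return report


records = [
    {"group": "interpreter", "selected": True, "needed_outreach": True},
    {"group": "interpreter", "selected": False, "needed_outreach": True},
    {"group": "no_interpreter", "selected": True, "needed_outreach": True},
    {"group": "no_interpreter", "selected": True, "needed_outreach": False},
]
report = subgroup_report(records)
```

Large gaps in coverage or precision between groups are a prompt for investigation, not proof of bias on their own.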

For broader context on empowerment and respectful care, see the discussion of women’s empowerment and reproductive health in Africa, which centers agency and dignity.

Privacy, consent, and safety

Work to the minimum necessary. Separate identifiers, encrypt data, and log access. For sensitive postpartum topics, use opt‑in messaging and avoid revealing model‑driven risk. Build clear referral pathways for IPV and mental‑health concerns. Align with privacy‑first guidance in public health ethics in AI deployment and the counseling safeguards in AI‑supported contraceptive counseling.
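Separating identifiers from analytic data could look like the following sketch, which derives a keyed pseudonym with HMAC‑SHA256 from the standard library. The field names and the idea of two separate stores are assumptions; key management, encryption at rest, and access logging are out of scope here.

```python
import hashlib
import hmac

# Assumption: these are the directly identifying fields in the source record.
IDENTIFIER_FIELDS = {"name", "phone", "address"}


def pseudonym(patient_id, secret_key):
    """Keyed hash so the pseudonym cannot be recomputed without the secret."""
    return hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def split_record(record, secret_key):
    """Return (identifier_row, analytic_row) linked only by the pseudonym."""
    key = pseudonym(record["patient_id"], secret_key)
    identifiers = {f: record[f] for f in IDENTIFIER_FIELDS if f in record}
    identifiers["patient_id"] = record["patient_id"]
    identifiers["pseudonym"] = key
    analytic = {
        f: v for f, v in record.items()
        if f not in IDENTIFIER_FIELDS and f != "patient_id"
    }
    analytic["pseudonym"] = key
    return identifiers, analytic


secret = b"example-key-load-from-a-secrets-manager"  # placeholder, never hard-code in practice
record = {"patient_id": "MRN123", "name": "Example Name", "phone": "555-0100",
          "last_systolic": 158, "interpreter_need": True}
identifiers, analytic = split_record(record, secret)
```

Analysts would then work only with the analytic rows, while the identifier table stays with the small group that performs outreach.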

From surveillance to learning: closing the loop

Surveillance is not only about counting events—it is a feedback engine for improvement. Use findings to:

- Adjust order sets and discharge instructions (e.g., mandatory BP cuff prescription for high‑risk patients).

- Shift clinic hours to better match patient availability.

- Standardize triage flows and escalation criteria across facilities.

- Inform pragmatic trials that evaluate specific changes at scale; see designs in pragmatic trials and RWE: better together.

Case vignette: a district’s postpartum hypertension program

Context: A district with five facilities sees rising severe postpartum hypertension events. Leaders want a lightweight system to catch issues sooner and support faster follow‑up.

Build

- Outcomes: severe BP within 10 days postpartum; missed day‑10 follow‑up among high‑risk patients.

- Data: EHR vitals and meds; discharge summaries; case review notes; transport vouchers; interpreter flags.

- Features: last two BPs, prior preeclampsia, antihypertensive prescription, interpreter need, distance to clinic, missed prenatal visits.

- Model: calibrated gradient‑boosted trees; top 30 patients per facility per week.

- Governance: model card; monthly subgroup calibration; redress channel for staff and patients.
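A monthly subgroup calibration check can start with the simplest comparison: mean predicted risk against the observed event rate in each group (sometimes called calibration‑in‑the‑large). The sketch below is illustrative only; the field names and sample values are assumptions, not the district’s data.

```python
from collections import defaultdict


def calibration_by_group(rows):
    """Compare mean predicted risk with the observed event rate per group."""
    sums = defaultdict(lambda: [0, 0.0, 0])  # count, sum of predictions, sum of outcomes
    for r in rows:
        s = sums[r["group"]]
        s[0] += 1
        s[1] += r["predicted"]
        s[2] += r["observed"]
    return {
        group: {
            "n": n,
            "mean_predicted": pred_sum / n,
            "observed_rate": obs_sum / n,
            "gap": pred_sum / n - obs_sum / n,  # positive gap = model over-predicts
        }
        for group, (n, pred_sum, obs_sum) in sums.items()
    }


rows = [
    {"group": "A", "predicted": 0.25, "observed": 0},
    {"group": "A", "predicted": 0.75, "observed": 1},
    {"group": "B", "predicted": 0.5, "observed": 0},
    {"group": "B", "predicted": 0.5, "observed": 0},
]
calibration = calibration_by_group(rows)
```

With small monthly counts the gaps will be noisy, so a governance group may prefer to review rolling totals before acting on any single month.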

Workflow

- Weekly lists land in the EHR registry. Nurses call with a supportive script, offer same‑day checks, and arrange transport or a loaner cuff when needed.

- CHWs conduct home BP checks for unreachable patients. Results flow back via a simple form.

- Social workers follow up on interpreter and privacy needs.

Results in three months

- Timely day‑10 BP checks increase from 42% to 67% overall, and reach 71% among patients with interpreter need after adding interpreter‑first outreach.

- Severe postpartum hypertension events are 24% lower among contacted patients than among randomized non‑contacts.

- Staff report higher confidence and lower burnout thanks to shorter, more relevant lists, a principle echoed in AI for population health management.

Implementation checklist

- Choose 2–3 outcomes with 7–30 day action windows; define them plainly.

- Map your data sources; add simple quality checks and unit normalization.

- Start with a transparent baseline model and evaluate precision at capacity‑matched cutoffs.

- Publish subgroup metrics and own an escalation plan for disparities.

- Embed tasks where staff work; provide scripts and resources.

- Document privacy choices; create opt‑in pathways for sensitive content.

- Report monthly performance and equity metrics; keep a change log.

Key takeaways

- Small, explainable tools and tight workflows beat complex systems you cannot maintain.

- Equity requires measurement, remedies, and community‑shaped scripts.

- Surveillance should feed improvement cycles and, when needed, pragmatic trials.
